How to Read a Stirng of Html Into R

As an instance of how to extract information from a web page, consider the chore of extracting the spring baseball schedule for the Cal Bears from http://calbears.cstv.com/sports/k-basebl/sched/cal-m-basebl-sched.html.

Reading a web page into R

Read the contents of the folio into a vector of graphic symbol strings with thereadLines function:

> thepage = readLines('http://calbears.cstv.com/sports/m-basebl/sched/cal-grand-basebl-sched.html') Warning message: In readLines("http://calbears.cstv.com/sports/g-basebl/sched/cal-m-basebl-sched.html") : incomplete last line institute on 'http://calbears.cstv.com/sports/m-basebl/sched/cal-one thousand-basebl-sched.html' The alarm messages simply ways that the last line of the web page didn't contain a newline character. This is really a good thing, since it usually indicates that the page was generated by a program, which generally makes it easier to extract information from it.

Notation: When you're reading a spider web folio, brand a local copy for testing; as a courtesy to the owner of the web site whose pages you're using, don't overload their server by constantly rereading the page. To make a copy from inside of R, look at thedownload.file office. Yous could also save a copy of the issue of usingreadLines, and practice on that until you've got everything working correctly.

Now nosotros have to focus in on what we're trying to excerpt. The first step is finding where it is. If you lot look at the web page, you'll come across that the title "Opponent / Event" is correct to a higher place the data nosotros want. We can locate this line using thegrep function:

> grep('Opponent / Event',thepage) [1] 513 If we look at the lines following this marker, we'll notice that the first appointment on the schedule can be found in line 536, with the other information following later on:

> thepage[536:545] [ane] " <td form=\"row-text\">02/xx/xi</td>" [2] " " [3] " <td form=\"row-text\">vs. Utah</td>" [four] " " [v] " <td class=\"row-text\">Berkeley, Calif.</td>" [6] " " [vii] " <td class=\"row-text\">West, 7-0</td>" [8] " " [9] " </tr>" [10] " "

Based on the previous step, the data that we desire is always preceded past the HTML tag "<td class="row-text»", and followed by "</td>". Let'south grab all the lines that have that pattern:

> mypattern = '<td form="row-text">([^<]*)</td>' > datalines = grep(mypattern,thepage[536:length(thepage)],value=Truthful)

I used value=Truthful, so I wouldn't have to worry about the indexing when I restricted myself to the lines from 550 on. Besides find that I've already tagged the part that I want, in preparation to the final call togsub.

Now that I've got the lines where my data is, I tin applygregexpr, thengetexpr (from the previous lecture), andgsub to extract the data without the HTML tags:

> getexpr = function(south,g)substring(due south,thousand,g+attr(m,'match.length')-ane) > gg = gregexpr(mypattern,datalines) > matches = mapply(getexpr,datalines,gg) > result = gsub(mypattern,'\\1',matches) > names(result) = Goose egg > result[1:10] [i] "02/19/11" "vs. Utah" "Evans Diamond" "1:00 p.m. PT" [five] "02/20/11" "vs. Utah" "Evans Diamond" "1:00 p.k. PT" [9] "02/22/11" "at Stanford"

Information technology seems pretty clear that nosotros've extracted just what we wanted - to go far more usable, we'll catechumen it to a data frame and provide some titles. Since information technology'south hard to describe how to convert a vector to a data frame, nosotros'll utilise a matrix as an intermediate footstep. Since there are four pieces of data (columns) for each game (row), a matrix is a natural pick:

> schedule = as.data.frame(matrix(outcome,ncol=four,byrow=Truthful)) > names(schedule) = c('Date','Opponent','Location','Result') > head(schedule) Appointment Opponent Location Consequence one 02/xix/11 vs. Utah Evans Diamond ane:00 p.m. PT ii 02/20/11 vs. Utah Evans Diamond 1:00 p.m. PT iii 02/22/eleven at Stanford Stanford, Calif. 5:30 p.m. PT 4 02/25/11 at Littoral Carolina Conway, S.C. 4:00 p.m. ET v 02/26/eleven vs. Kansas Country Conway, S.C. 11:00 a.one thousand. ET 6 vs. Due north Carolina State Conway, S.C. xi:30 a.m. ET Some other Instance

At http://www.imdb.com/chart is a box-office summary of the x top movies, along with their gross profits for the current weekend, and their total gross profits. We would like to brand a data frame with that information. As always, the get-go function of the solution is to read the page into R, and use an ballast to discover the part of the data that nosotros want. In this case, the table has column headings, including one for "Rank".

> x = readLines('http://www.imdb.com/chart/') > grep('Rank',ten) [1] 1294 1546 1804 Starting with line 1294 we can expect at the data to see where the information is. A picayune experimentation shows that the useful data starts on line 1310:

> x[1310:1318] [1] " <td class=\"chart_even_row\">" [2] " <a href=\"/title/tt0990407/\">The Green Hornet</a> (2011)" [3] " </td>" [4] " <td class=\"chart_even_row\" way=\"text-align: right; padding-right: 20px\">" [five] " $33.5M" [vi] " </td>" [seven] " <td class=\"chart_even_row\" manner=\"text-align: correct\">" [8] " $40M" [9] " </td>" [10] " </tr>"

At that place are 2 types of lines that contain useful data: the ones with the title, and the ones that begin with some blanks followed by a dollar sign. Hither'south a regular expression that volition pull out both those lines:

> goodlines = '<a href="/title[^>]*>(.*)</a>.*$|^ *\\$' > try = grep(goodlines,x,value=Truthful)

Looking at the beginning oftry, information technology seems like we got what we want:

> try[i:10] [1] " <a href=\"/championship/tt1564367/\">Just Go with It</a> (2011)" [ii] " $30.5M" [3] " $30.5M" [four] " <a href=\"/championship/tt1702443/\">Justin Bieber: Never Say Never</a> (2011)" [5] " $29.5M" [6] " $30.3M" [7] " <a href=\"/championship/tt0377981/\">Gnomeo & Juliet</a> (2011)" [viii] " $25.4M" [9] " $25.4M" [10] " <a href=\"/title/tt1034389/\">The Eagle</a> (2011)"

Sometimes the trickiest function of getting the data off a webpage is figuring out exactly the function you lot demand. In this instance, at that place is a lot of inapplicable information later the tabular array we desire. By examining the output, nosotros can run across that nosotros merely desire the offset 30 entries. We also need to remove the extra information from the title line. Nosotros tin can apply thesub function with a modified version of our regular expression:

> try = try[1:30] > endeavor = sub('<a href="/title[^>]*>(.*)</a>.*$','\\i',attempt) > head(try) [1] " Just Go with Information technology" [2] " $xxx.5M" [3] " $thirty.5M" [iv] " Justin Bieber: Never Say Never" [v] " $29.5M" [6] " $30.3M" Once the spaces at the first of each line are removed, nosotros tin rearrange the data into a 3-column data frame:

> endeavor = sub('^ *','',try) > movies = data.frame(matrix(try,ncol=3,byrow=True)) > names(movies) = c('Name','Wkend Gross','Total Gross') > head(movies) Name Wkend Gross Full Gross 1 But Go with It $xxx.5M $30.5M 2 Justin Bieber: Never Say Never $29.5M $30.3M 3 Gnomeo & Juliet $25.4M $25.4M 4 The Eagle $8.68M $8.68M 5 The Roommate $8.13M $25.8M 6 The King'due south Speech $7.23M $93.7M We tin can supersede the special characters with the following code:

> movies$Name = sub('&','&',movies$Proper name) > movies$Name = sub(''','\'',movies$Name) Dynamic Web Pages

While reading data from static web pages as in the previous examples can be very useful (particularly if y'all're extracting data from many pages), the real power of techniques similar this has to do with dynamic pages, which have queries from users and return results based on those queries. For example, an enormous amount of information almost genes and proteins tin can be found at the National Middle of Biotechnology Data website (http://world wide web.ncbi.nlm.nih.gov/), much of it available through query forms. If you're just performing a few queries, information technology's no problem using the web folio, simply for many queries, it's beneficial to automate the procedure.

Hither is a unproblematic instance that illustrate the concept of accessing dynamic information from web pages. The page http://finance.yahoo.com provides information virtually stocks; if yous enter a stock symbol on the page, (for instanceaapl for Apple Computer), you will be directed to a page whose URL (equally information technology appears in the browser accost bar) is

http://finance.yahoo.com/q?southward=aapl&ten=0&y=0

The fashion that stock symbols are mapped to this URL is pretty obvious. We'll write an R function that volition extract the current price of whatsoever stock we're interested in.

The first pace in working with a page like this is to download a local re-create to play with, and to read the folio into a vector of character strings:

> download.file('http://finance.yahoo.com/q?s=aapl&x=0&y=0','quote.html') trying URL 'http://finance.yahoo.com/q?south=aapl&x=0&y=0' Content type 'text/html; charset=utf-eight' length unknown opened URL .......... .......... .......... ......... downloaded 39Kb > 10 = readLines('quote.html') To get a experience for what we're looking for, find that the words "Last Merchandise:" announced before the current quote. Let's look at the line containing this string:

> grep('Last Trade:',10) 45 > nchar(x[45]) [1] 3587 Since there are over 3500 characters in the line, we don't want to view it direct. Let's usegregexpr to narrow down the search:

> gregexpr('Terminal Merchandise:',ten[45]) [[1]] [i] 3125 attr(,"match.length") [one] xi This shows that the string "Terminal Trade:" starts at graphic symbol 3125. Nosotros tin can utilizesubstr to encounter the relevant office of the line:

> substring(x[45],3125,3220) [1] "Last Trade:</th><td class=\"yfnc_tabledata1\"><big><b><span id=\"yfs_l10_aapl\">363.l</span></b></b"

There's plenty of context - we want the office surrounded past<large><b><span ... and</bridge>. One easy way to grab that office is to use a tagged regular expression withgsub:

> gsub('^.*<big><b><span [^>]*>([^<]*)</bridge>.*$','\\1',10[45]) [i] "363.fifty" This suggests the following function:

> getquote = function(sym){ + baseurl = 'http://finance.yahoo.com/q?s=' + myurl = paste(baseurl,sym,'&ten=0&y=0',sep='') + 10 = readLines(myurl) + q = gsub('^.*<big><b><span [^>]*>([^<]*)</span>.*$','\\1',grep('Last Merchandise:',x,value=TRUE)) + every bit.numeric(q) +} As always, functions like this should be tested:

> getquote('aapl') [1] 196.xix > getquote('ibm') [ane] 123.21 > getquote('nok') [1] 13.35 These functions provide only a single quote; a niggling exploration of the yahoo finance website shows that we tin can get CSV files with historical data past using a URL of the form:

http://ichart.finance.yahoo.com/table.csv?s=30

wherethirty is the symbol of interest. Since it's a comma-separated file, Nosotros can useread.csv to read the chart.

gethistory = role(symbol) read.csv(paste('http://ichart.finance.yahoo.com/table.csv?s=',symbol,sep='')) Hither's a simple test:

> aapl = gethistory('aapl') > head(aapl) Engagement Open High Low Close Volume Adj.Shut 1 2011-02-15 359.xix 359.97 357.55 359.90 10126300 359.90 2 2011-02-14 356.79 359.48 356.71 359.18 11073100 359.18 3 2011-02-11 354.75 357.80 353.54 356.85 13114400 356.85 4 2011-02-10 357.39 360.00 348.00 354.54 33126100 354.54 v 2011-02-09 355.19 359.00 354.87 358.16 17222400 358.16 six 2011-02-08 353.68 355.52 352.15 355.20 13579500 355.xx Unfortunately, if nosotros effort to employ theEngagement cavalcade in plots, it will not piece of work properly, since R has stored it every bit a factor. The format of the date is the default for theas.Date function, and so we tin can modify our function every bit follows:

gethistory = function(symbol){ data = read.csv(paste('http://ichart.finance.yahoo.com/table.csv?due south=',symbol,sep='')) information$Appointment = as.Date(data$Date) data } Now, we tin produce plots with no problems:

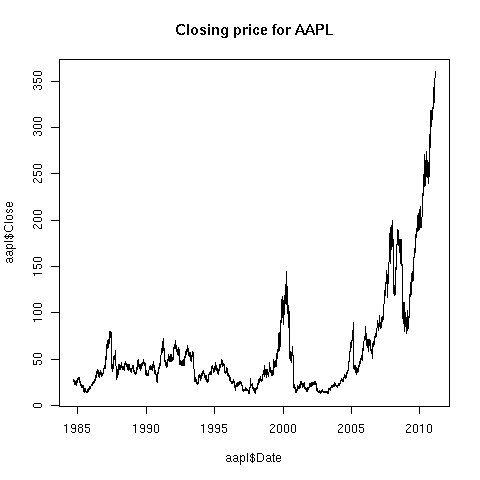

> aapl = gethistory('aapl') > plot(aapl$Date,aapl$Close,master='Endmost price for AAPL',type='l') The plot is shown below:

cooperhichislon75.blogspot.com

Source: https://statistics.berkeley.edu/computing/faqs/reading-web-pages-r

0 Response to "How to Read a Stirng of Html Into R"

Enviar um comentário